Transport Layer Security (TLS) or, as it was known in the beginnings of the Internet, Secure Sockets Layer (SSL) is the technology responsible for securing communications between different devices. It is used everyday by nearly everyone using the globe-spanning network.

Let’s take a closer look at how TLS is used by servers that underpin the World Wide Web and how the promise of security is actually executed.

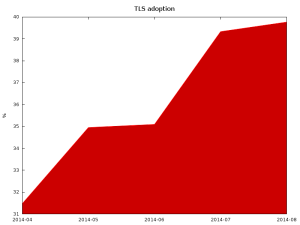

Adoption

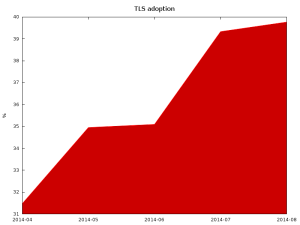

Hyper Text Transfer Protocol (HTTP) in versions 1.1 and older make encryption (thus use of TLS) optional. Given that the upcoming HTTP 2.0 will require use of TLS and that Google now uses the HTTPS in its ranking algorithm, it is expected that many sites will become TLS-enabled.

Surveying the Alexa top 1 million sites, most domains still don’t provide secure communication channel for their users.

Just under 40% of HTTP servers support TLS or SSL and present valid certificates.

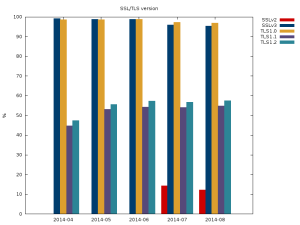

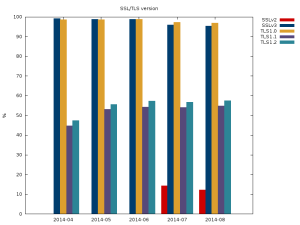

Additionally, if we look at the version of the protocol supported by the servers most don’t support the newest (and most secure) version of the protocol TLSv1.2. Of more concern is the number of sites that support the completely insecure SSLv2 protocol.

Only half of HTTPS servers support TLS 1.2

(There are no results for SSLv2 for first 3 months because of error in software that was collecting data.)

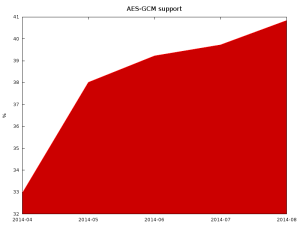

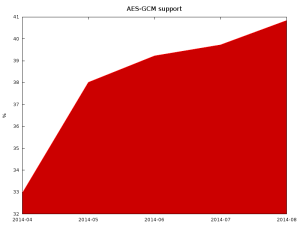

One of the newest and most secure ciphers available in TLS is Advanced Encryption Standard (AES) in Galois/Counter Mode (AES-GCM). Those ciphers provide good security, resiliency against known attacks (BEAST and Lucky13), and very good performance for machines with hardware accelerators for them (modern Intel and AMD CPUs, upcoming ARM).

Unfortunately, it is growing a bit slower than TLS adoption in general, which means that some of the newly deployed servers aren’t using new cryptographic libraries or are configured to not use all of their functions.

Only 40% of TLS web servers support AES-GCM ciphersuites.

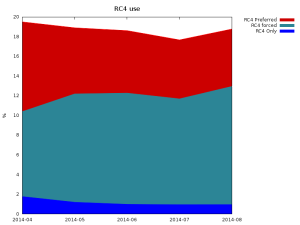

Bad recommendations

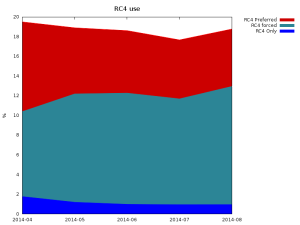

A few years back, a weakness in TLS 1.0 and SSL 3 was shown to be exploitable in the BEAST attack. The recommended workaround for it was to use RC4-based ciphers. Unfortunately, we later learned that the RC4 cipher is much weaker than it was previously estimated. As the vulnerability that allowed BEAST was fixed in TLSv1.1, using RC4 ciphers with new protocol versions was always unnecessary. Additionally, now all major clients have implemented workarounds for this attack, which currently makes using RC4 a bad idea.

Unfortunately, many servers prefer RC4 and some (~1%) actually support only RC4. This makes it impossible to disable this weak cipher on client side to force the rest of servers (nearly 19%) to use different cipher suite.

RC4 is still used with more than 18% of HTTPS servers.

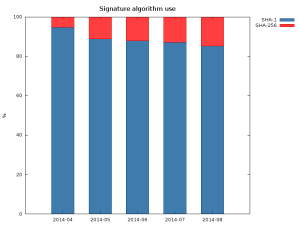

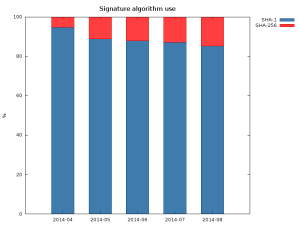

The other common issue, is that many certificates are still signed using the obsolete SHA-1. This is mostly caused by backwards compatibility with clients like Windows XP pre SP2 and old phones.

SHA-256 certificates only recently started growing in numbers

The sudden increase in the SHA-256 between April and May was caused by re-issuance of certificates in the wake of Heartbleed.

Bad configuration

Many servers also support insecure cipher suites. In the latest scan over 3.5% of servers support some cipher suites that uses AECDH key exchange, which is completely insecure against man in the middle attacks. Many servers also support single DES (around 15%) and export grade cipher suites (around 15%). In total, around 20% of servers support some kind of broken cipher suite.

While correctly implemented SSLv3 and later shouldn’t allow negotiation of those weak ciphers if stronger ones are supported by both client and server, at least one commonly used implementation had a vulnerability that did allow for changing the cipher suite to arbitrary one commonly supported by both client and server. That’s why it is important to occasionally clean up list of supported ciphers, both on server and client side.

Forward secrecy

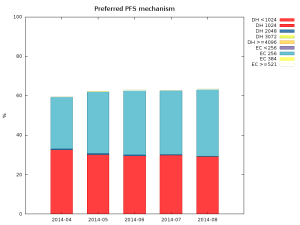

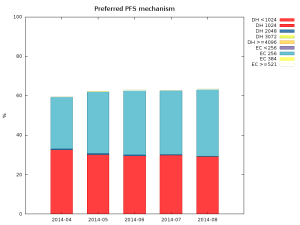

Forward secrecy, also known as perfect forward secrecy (PFS), is a property of a cipher suite that makes it impossible to decrypt communication between client and server when the attacker knows the server’s private key. It also protects old communication in case the private key is leaked or stolen. That’s why it is such a desirable property.

The good news is that most servers (over 60%) not only support, but will actually negotiate cipher suites that provide forward secrecy with clients that support it. The used types are split essentially between 1024 bit DHE and 256 bit ECDHE, scoring respectively 29% and 33% of all servers in latest scan. The amount of servers that do negotiate PFS enabled cipher suites is also steadily growing.

PFS support among TLS-enabled HTTP servers

Summary

Most Internet facing servers are badly configured, sometimes it is caused by lack of functionality in software, like in case of old Apache 2.2.x releases that don’t support ECDHE key exchange, and sometimes because of side effects of using new software with old configuration (many configuration tutorials suggested using !ADH in cipher string to disable anonymous cipher suites, that unfortunately doesn’t disable anonymous Elliptic Curve version of DH – AECDH, for that, use of !aNULL is necessary).

Thankfully, the situation seems to be improving, unfortunately rather slowly.

If you’re an administrator of a server, consider enabling TLS. Performance issues when encryption was slow and taxing on servers are long gone. If you already use TLS, double check your configuration preferably using the Mozilla guide to server configuration as it is regularly updated. Make sure you enable PFS cipher suites and put them above non-PFS ciphers and that you as well as the Certificate Authority you’ve chosen, use modern crypto (SHA-2) and large key sizes (at least 2048 bit RSA).

If you’re a user of a server and you’ve noticed that the server doesn’t use correct configuration, try contacting the administrator – he may have just forgotten about it.