Executive Summary: The IKE daemons in RHEL7 (libreswan) and RHEL6 (openswan) are not vulnerable to the SLOTH attack. But the attack is still interesting to look at.

The SLOTH attack released today is a new transcript collision attack against some security protocols that use weak or broken hashes such as MD5 or SHA1. While it mostly focuses on the issues found in TLS, it also mentions weaknesses in the “Internet Key Exchange” (IKE) protocol used for IPsec VPNs. While the TLS findings are very interesting and have been assigned CVE-2015-7575, the described attacks against IKE/IPsec got close but did not result in any vulnerabilities. In the paper, the authors describe a Chosen Prefix collision attack against IKEv2 using RSA-MD5 and RSA-SHA1 to perform a Man-in-the-Middle (MITM) attack and a Generic collision attack against IKEv1 HMAC-MD5.

We looked at libreswan and openswan-2.6.32 compiled with NSS, as that is what we ship in RHEL7 and RHEL6. Upstream openswan with its custom crypto code was not evaluated. While no vulnerability was found, we identified some hardening that makes this attack less dangerous. That hardening will be added in the next upstream version of libreswan.

Specifically, the attack was prevented because:

- The SPIs in IKE are random and part of the hash, so it requires an online attack of 2^77, not an offline attack as suggested in the paper.

- MD5 is not enabled by default for IKEv2.

- Weak Diffie-Hellman groups DH22, DH23 and DH24 are not enabled by default.

- Libreswan as a server does not re-use nonces for multiple clients.

- Libreswan destroys nonces when an IKE exchange times out (default 60s).

- Bogus ID payloads in IKEv1 cause the connection to fail authentication.

The rest of this article explains the IKEv2 protocol and the SLOTH attack.

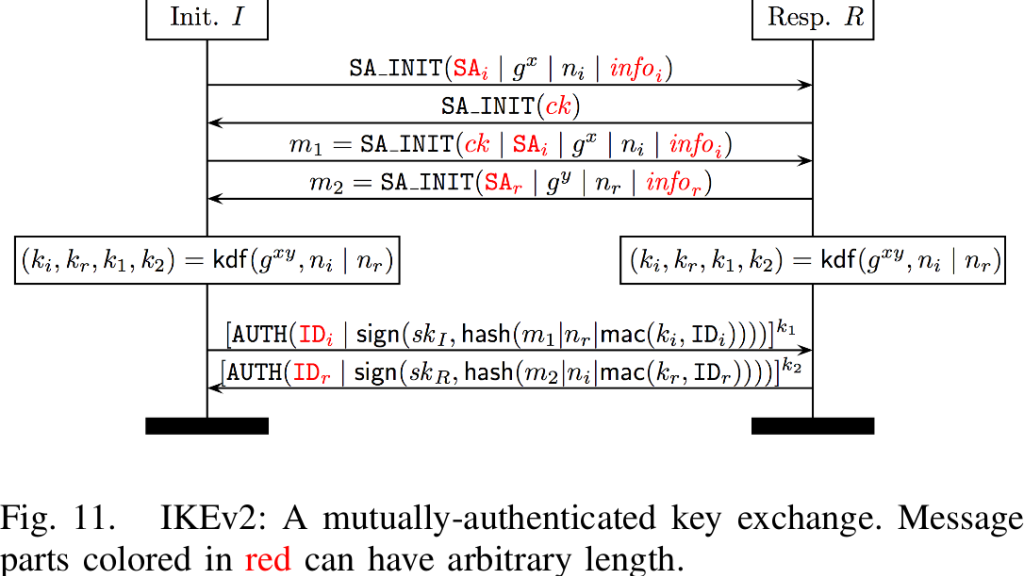

The IKEv2 protocol

The IKE exchange starts with an IKE_INIT packet exchange (named IKE_SA_INIT in the RFC) to perform the Diffie-Hellman Key Exchange. In this exchange, the initiator and responder also exchange their nonces. The result is that both parties now share the Diffie-Hellman secret, which is combined with the nonces into a value called SKEYSEED. This is fed into a mutually agreed PRF algorithm (which could be MD5, SHA1 or SHA2) to generate as much pseudo-random key material as needed. The first key(s) are for the IKE exchange itself (called the IKE SA or Parent SA), followed by keys for one or more IPsec SAs (also called Child SAs).
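The expansion step can be sketched as follows. This is a minimal illustration, assuming HMAC-SHA256 as the negotiated PRF and following the prf+ construction described in RFC 7296 (SKEYSEED = prf(Ni | Nr, g^ir), then prf+ keyed with SKEYSEED over the nonces and SPIs). The function names are hypothetical and this is not libreswan's code.

```python
import hashlib
import hmac


def prf(key: bytes, data: bytes) -> bytes:
    # Assumption: HMAC-SHA256 stands in for whatever PRF the peers negotiated.
    return hmac.new(key, data, hashlib.sha256).digest()


def prf_plus(key: bytes, seed: bytes, length: int) -> bytes:
    """Expand key into `length` bytes: T1 = prf(K, S | 0x01), Tn = prf(K, Tn-1 | S | n)."""
    if length > 255 * hashlib.sha256().digest_size:
        raise ValueError("prf+ is limited to 255 iterations")
    output = b""
    block = b""
    counter = 1
    while len(output) < length:
        block = prf(key, block + seed + bytes([counter]))
        output += block
        counter += 1
    return output[:length]


def derive_keymat(ni: bytes, nr: bytes, dh_secret: bytes,
                  spi_i: bytes, spi_r: bytes, length: int) -> bytes:
    # SKEYSEED mixes both nonces with the Diffie-Hellman shared secret.
    skeyseed = prf(ni + nr, dh_secret)
    # The key material is then cut into the individual IKE SA keys.
    return prf_plus(skeyseed, ni + nr + spi_i + spi_r, length)
```

Both peers run the same derivation on the same inputs, so they arrive at identical keys without ever sending them over the wire.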

But before the SKEYSEED can be used, both ends need to perform an authentication step. This is the second packet exchange, called IKE_AUTH. This will bind the Diffie-Hellman channel to an identity to prevent a MITM attack. Usually these are digital signatures over the session data to prove ownership of the identity’s private key. Technically, it signs a hash of the session data. In TLS that signature is over the hash of the session data, which made TLS more vulnerable to the SLOTH attack.

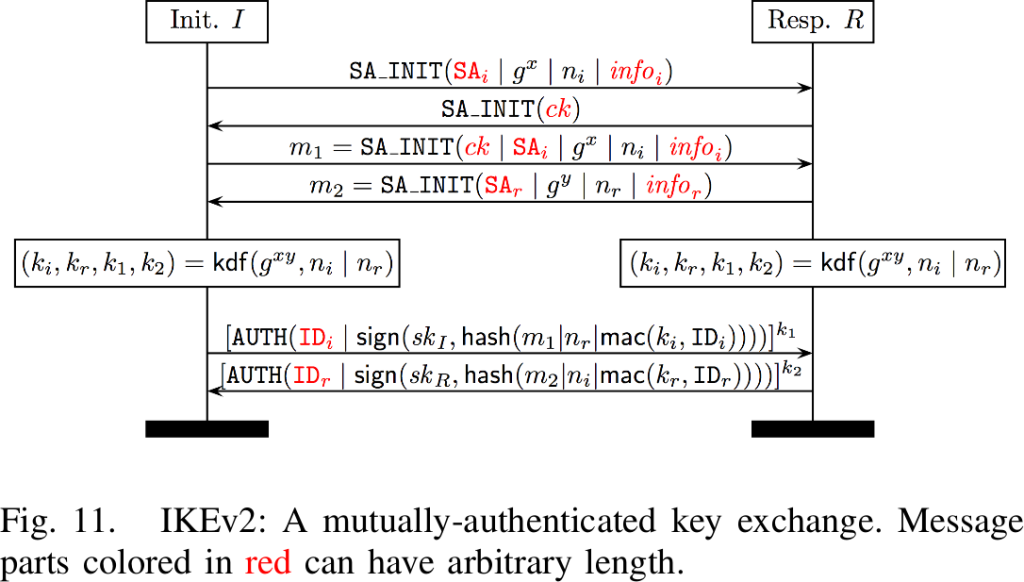

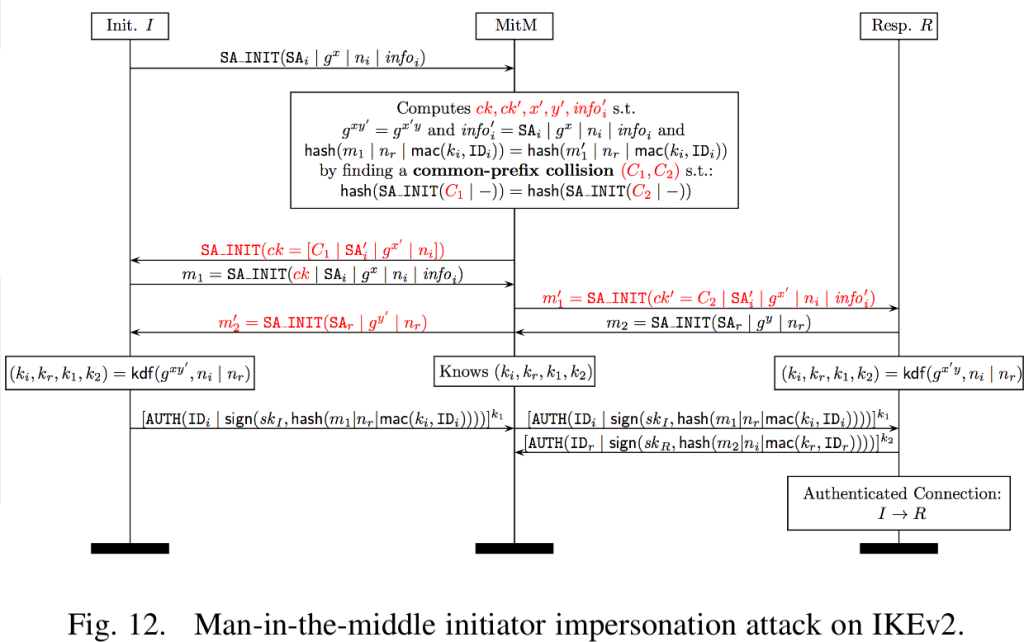

The attack is to trick both parties into signing a hash which the attacker can replay to the other party to fake the authentication of both entities.

They call this a “transcript collision”. To facilitate the creation of the same hash, the attacker needs to be able to insert its own data in the session to the first party so that the hash of that data will be identical to the hash of the session to the second party. It can then just pass on the signatures without needing to have private keys for the identities of the parties involved. It then needs to remain in the middle to decrypt and re-encrypt and pass on the data, while keeping a copy of the decrypted data.
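To make the idea concrete, here is a toy sketch. It assumes a deliberately crippled hash (SHA-256 truncated to 24 bits) so that a collision can be found in a fraction of a second. It uses a simple birthday search rather than the chosen-prefix collision technique the paper relies on for MD5 and SHA1, and the transcript prefixes are made up for illustration.

```python
import hashlib
from itertools import count


def weak_hash(data: bytes) -> bytes:
    # Toy assumption: only 24 bits of output, so collisions are cheap to find.
    return hashlib.sha256(data).digest()[:3]


def find_collision(prefix_a: bytes, prefix_b: bytes):
    """Find attacker-chosen suffixes that make two different transcripts hash alike."""
    seen = {}  # weak hash of a candidate transcript A -> the suffix that produced it
    for i in count():
        suffix = i.to_bytes(4, "big")
        seen.setdefault(weak_hash(prefix_a + suffix), suffix)
        digest_b = weak_hash(prefix_b + suffix)
        if digest_b in seen:
            return prefix_a + seen[digest_b], prefix_b + suffix


# Hypothetical transcripts as seen by the two victims.
transcript_a, transcript_b = find_collision(b"client-view|", b"server-view|")
```

A signature computed over `weak_hash(transcript_a)` would also verify for `transcript_b`, which is what lets the attacker forward signatures without holding any private key. With a full-length, collision-resistant hash the same search is infeasible.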

The IKEv2 COOKIE

The initial IKE_INIT exchange does not have many payloads that can be used to manipulate the outcome of the hashing of the session data. The only candidate is the NOTIFY payload of type COOKIE.

Performing a Diffie-Hellman exchange is relatively expensive. An attacker could send a lot of IKE_INIT requests forcing the VPN server to use up its resources. These could all come from spoofed source IP addresses, so blacklisting such an attack is impossible. To defend against this, IKEv2 introduced the COOKIE mechanism. When the server gets too busy, instead of performing the Diffie-Hellman exchange, it calculates a cookie based on the client’s IP address, the client’s nonce and its own server secret. It hashes these and sends it as a COOKIE payload in an IKE_INIT reply to the client. It then deletes all the state for this client. If this IKE_INIT exchange was a spoofed request, nothing more will happen. If the request was a legitimate client, this client will receive the IKE_INIT reply, see the COOKIE payload and re-send the original IKE_INIT request, but this time it will include the COOKIE payload it received from the server. Once the server receives this IKE_INIT request with the COOKIE, it will calculate the cookie data (again) and if it matches, the client has proven that it contacted the server before. To avoid COOKIE replays and thwart attacks attempting to brute-force the server secret used for creating the cookies, the server is expected to regularly change its secret.
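The stateless cookie scheme can be sketched roughly as below. This assumes the construction suggested in RFC 7296, a secret version byte followed by a hash over the initiator's nonce, IP address, SPI and the server secret, with SHA-256 as the hash. The class and method names are hypothetical and do not reflect how any particular IKE daemon implements it.

```python
import hashlib
import hmac
import os


class CookieJar:
    """Stateless COOKIE generation with a rotating server secret (illustrative)."""

    def __init__(self) -> None:
        self.version = 0
        self.secret = os.urandom(32)

    def rotate(self) -> None:
        # Changing the secret invalidates every cookie handed out so far.
        self.version = (self.version + 1) % 256
        self.secret = os.urandom(32)

    def make(self, nonce_i: bytes, ip_i: bytes, spi_i: bytes) -> bytes:
        # No per-client state is kept: the cookie is recomputable from the request.
        digest = hashlib.sha256(nonce_i + ip_i + spi_i + self.secret).digest()
        return bytes([self.version]) + digest

    def check(self, cookie: bytes, nonce_i: bytes, ip_i: bytes, spi_i: bytes) -> bool:
        expected = self.make(nonce_i, ip_i, spi_i)
        # Constant-time comparison avoids leaking how many bytes matched.
        return hmac.compare_digest(cookie, expected)
```

Because the server can recompute the cookie from the repeated request, it only needs to remember its own secret, not anything about the clients it challenged.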

Abusing the COOKIE

The SLOTH attacker is the MITM between the VPN client and VPN server. It prepares an IKE_INIT request to the VPN server but waits for the VPN client to connect. Once the VPN client connects, it does some work with the received data that includes the proposals and nonce to calculate a malicious COOKIE payload and sends this COOKIE to the VPN client. The VPN client will re-send the IKE_INIT request with the COOKIE to the MITM. The MITM now sends this data to the real VPN server to perform an IKE_INIT there. It includes the COOKIE payload even though the VPN server did not ask for a COOKIE. Why does the VPN server not reject this connection? Well, the IKEv2 RFC-7296 states:

When one party receives an IKE_SA_INIT request containing a cookie whose contents do not match the value expected, that party MUST ignore the cookie and process the message as if no cookie had been included

This was likely intended for a recovering server. If the server is no longer busy, it will stop sending cookies and stop requiring cookies. But a few clients that were just about to reconnect will send back the cookie they received when the server was still busy. The server shouldn’t reject these clients now, so the advice was to ignore the cookie in that case. Alternatively, the server could just remember the last used secret for a while and, if it receives a cookie when it is not busy, still do the cookie validation. But that costs some resources too, which an attacker can abuse by sending IKE_INIT requests with bogus cookies. Limiting cookie validation to a short period after the server stopped being busy would mitigate this.

COOKIE size

The paper contains an error when it talks about this COOKIE size:

To implement the attack, we must first find a collision between m1 and m’1. We observe that in IKEv2 the length of the cookie is supposed to be at most 64 octets but we found that many implementations allow cookies of up to 2^16 bytes. We can use this flexibility in computing long collisions.

It is not clear where the authors got the value of 64. The RFC does not mention anything about the maximum cookie size. The COOKIE value is sent as a NOTIFY payload. These payloads have a two byte Payload Length value, so a NOTIFY payload can legitimately be up to 2^16 - 1 (65535) bytes long. Adding more bytes should not be possible. Any IKE implementation that reads more bytes than specified in the Payload Length value would be very broken. Assuming the COOKIE NOTIFY is the last payload in the packet, the attacker could increase the length specified in the IKE header and stuff additional bytes after this payload, but proper implementations would not read this data. In fact, libreswan encountered some interoperability problems when it did this by mistake: it was padding the IKE packets to a multiple of 8 bytes (as per IKEv1 but not IKEv2) and got its IKE packets rejected by various implementations. Still, the authors claim 65535 bytes is enough for their attack.
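The length handling can be illustrated with a small parser for the IKEv2 generic payload header (one byte Next Payload, one byte of flags, two bytes of Payload Length in network byte order, with the length covering the header itself). This is a sketch with a hypothetical function name, not code taken from libreswan.

```python
import struct

# Next Payload (1 byte), critical flag + reserved (1 byte), Payload Length (2 bytes).
GENERIC_HEADER = struct.Struct("!BBH")


def split_payload(buf: bytes, offset: int = 0):
    """Return (next_payload, body, next_offset) for the payload starting at offset."""
    if len(buf) - offset < GENERIC_HEADER.size:
        raise ValueError("truncated payload header")
    next_payload, _flags, length = GENERIC_HEADER.unpack_from(buf, offset)
    # The length field includes the 4 byte header and must fit in the packet.
    if length < GENERIC_HEADER.size or offset + length > len(buf):
        raise ValueError("payload length out of bounds")
    body = buf[offset + GENERIC_HEADER.size:offset + length]
    return next_payload, body, offset + length


# Bytes stuffed after the declared length are never part of the payload body.
packet = GENERIC_HEADER.pack(0, 0, 4 + 5) + b"hello" + b"STUFFED"
next_payload, body, end = split_payload(packet)
```

Since the length field is 16 bits wide, a value above 65535 cannot even be encoded, which is why the payload size is bounded regardless of what follows it in the packet.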

Attacking the AUTH hash

Assuming the above works, the attacker needs to find a collision between m1 and m’1. The only case the authors claim could be feasible is when MD5 is used for the authentication step in IKE_AUTH. An offline attack could then be computed at a cost of 2^16 to 2^39, which they say would take about 5 hours. As the paper states, IKEv2 implementations either don’t support MD5, or if they do, it is not part of the default proposal set. The paper makes the case that the weak SHA1 is widely supported in IKEv2 but admits that using SHA1 will need more computing power (they listed 2^61 to 2^67 or 20 years). Note that libreswan (and openswan in RHEL) requires manual configuration to enable MD5 in IKEv2, but SHA1 is still allowed for compatibility.

The final step of the attack – Diffie-Hellman

Assuming the above succeeds, the attacker needs to ensure that g^xy’ = g^x’y. To facilitate that, they use a subgroup confinement attack, and illustrate this with an example of picking x’ = y’ = 0. Then the two shared secrets would have the value 1. In practice this does not work, according to the authors, because most IKEv2 implementations validate the received Diffie-Hellman public value to ensure that it is larger than 1 and smaller than p - 1. They did find that Diffie-Hellman groups 22 to 24 are known to have many small subgroups, and implementations tend to not validate these. This led to an interesting discussion on one of the cypherpunks mailing lists about the mysterious nature of the DH groups in RFC-5114. These groups are not enabled in libreswan (or openswan in RHEL) by default, and require manual configuration precisely because their origin is a mystery.
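Both checks can be sketched with toy numbers. The example assumes p = 31, where 2 generates a subgroup of order q = 5 and 5 generates a small subgroup of order 3. Real groups use primes of 2048 bits or more, and the function name is hypothetical.

```python
from typing import Optional


def is_valid_dh_public(y: int, p: int, q: Optional[int] = None) -> bool:
    """Validate a peer's Diffie-Hellman public value."""
    # Range check: rejects 0, 1 and p - 1, which force a trivial shared secret.
    if not 1 < y < p - 1:
        return False
    # Subgroup check, only possible when the subgroup order q is known:
    # a value in the intended subgroup satisfies y^q = 1 (mod p).
    if q is not None and pow(y, q, p) != 1:
        return False
    return True


P, Q = 31, 5  # toy parameters, for illustration only

# A public value of 1 (the x' = y' = 0 case) makes every shared secret equal 1.
degenerate = {pow(1, secret, P) for secret in range(1, P - 1)}

# 5 passes the range check, but confines the shared secret to three values.
confined = {pow(5, secret, P) for secret in range(1, P - 1)}
```

Here `degenerate` is `{1}` and `confined` is `{1, 5, 25}`. The range check alone accepts 5, while the subgroup check with q = 5 rejects it, which is the kind of validation that groups with many small subgroups need.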

The IKEv1 attack

The paper briefly brainstorms about a variant of this attack using IKEv1. It would be interesting because MD5 is very common with IKEv1, but the article is not really clear on how that attack should work. It mentions filling the ID payload with malicious data to trigger the collision, but such an ID would never pass validation.

Countermeasures

Work has already started on updating the cryptographic algorithms deemed mandatory to implement for IKE. Note that this work does not state which algorithms are valid to use, or which to use by default. It is happening at the IPsec working group at the IETF and can be found at draft-ietf-ipsecme-rfc4307bis. The draft is expected to go through a few more rounds of discussion, and one of the topics that will be raised is the weak DH groups specified in RFC-5114.

Upstream Libreswan has hardened its cookie handling code, preventing the attacker from sending an uninvited cookie to the server without having their connection dropped.